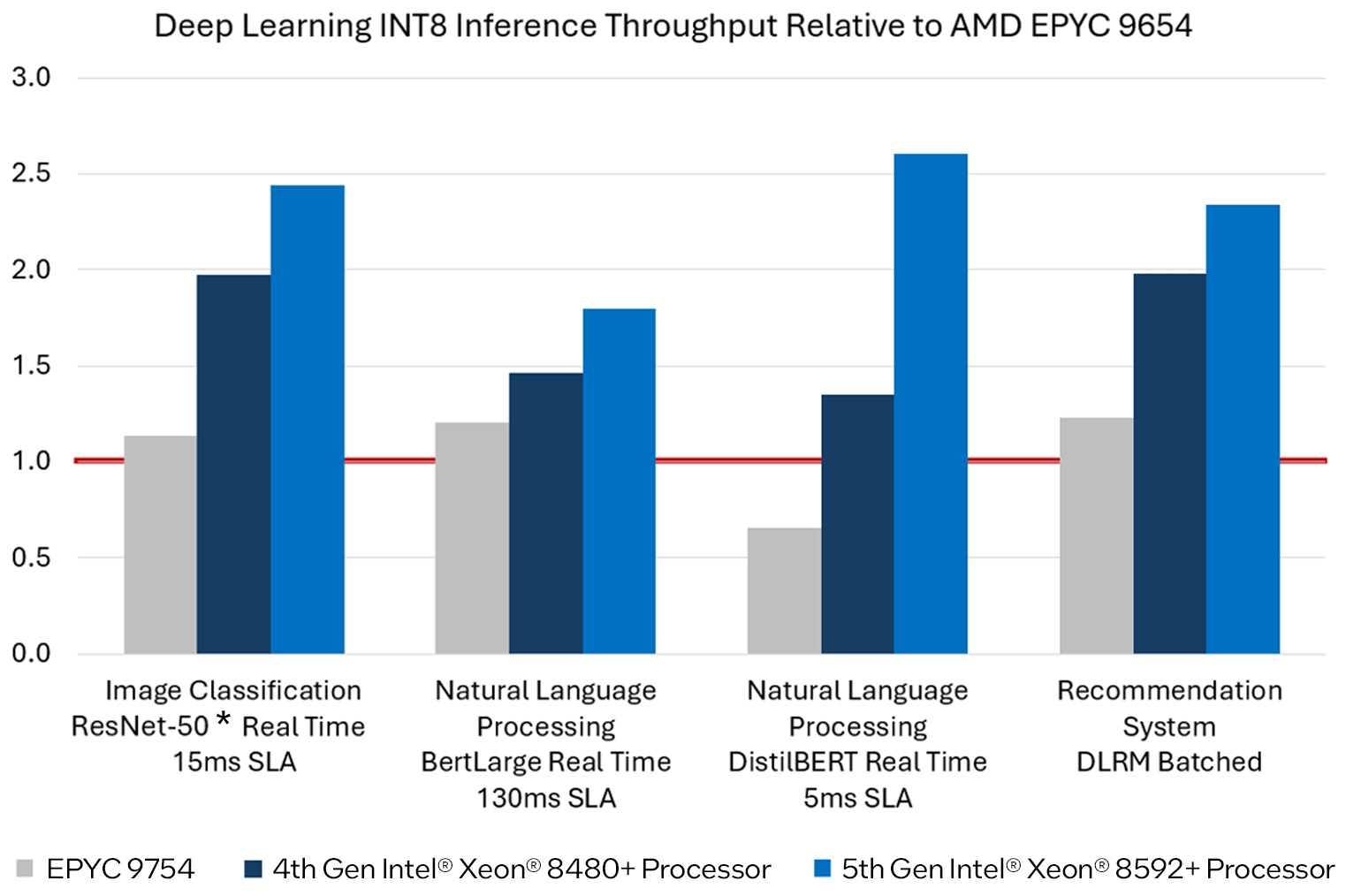

In a nutshell: Intel has disputed AMD’s claims that AMD’s 5th-gen Epyc ‘Turin’ CPUs offer faster AI processing than Intel’s 5th-gen Xeon chips. According to Intel, its 64-core Xeon Platinum 8592+ processors can outperform AMD’s latest 128-core data center CPUs in the same workloads with the right software optimizations.

Intel’s statement comes just days after AMD unveiled its 5th-gen Epyc CPUs, with up to 192 cores based on the Zen 5 architecture, at Computex 2024. Expected to be marketed as part of the Epyc 9005 family, the new lineup will offer a diverse range of processors designed for compute, cloud, telco, and edge workloads.

Although the chips aren’t expected to hit the market until later this year, AMD showcased benchmarks suggesting they will outpace Intel’s Emerald Rapids (5th-gen Xeon) family in AI throughput workloads.

AMD claimed that a pair of its Epyc Turin processors can be up to 5.4 times faster than a pair of Intel’s Xeon Platinum 8592+ CPUs when running a Llama 2-based chatbot. Intel, however, says the benchmarks showcased by Team Red are an unfair comparison. According to Intel, AMD did not disclose the software configuration it used for these benchmarks, and Intel’s own testing shows the Xeon chips to be faster than the Epyc processors at the same task.

According to AMD’s benchmarks, two of its 128-core 5th-gen Epyc CPUs in a dual-socket configuration deliver up to 671 tokens per second in Llama 2-7B, while two 64-core Xeon Platinum 8592+ chips in a similar dual-socket setup managed just 125 tokens per second.

However, when Intel repeated the same tests (P99 latency) using its Intel Extension for PyTorch, the results were drastically different. In these tests, the Xeons delivered 686 tokens per second, roughly 5.5 times the 125 tokens per second AMD had reported for them and slightly ahead of the 671 tokens per second AMD claimed for Turin.
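The headline multipliers in both companies’ claims come straight from dividing the throughput figures quoted above. The short Python sketch below does only that arithmetic on the reported Llama 2-7B numbers; it reproduces nothing about the benchmarks themselves, and the constant and function names are our own.

```python
# Llama 2-7B throughput in tokens per second on dual-socket systems,
# using the figures quoted in this article (names are illustrative only).
TURIN_AMD = 671        # 2x 128-core Epyc "Turin", AMD's measurement
XEON_AMD = 125         # 2x 64-core Xeon Platinum 8592+, AMD's measurement
XEON_IPEX_INTEL = 686  # 2x Xeon Platinum 8592+ with IPEX, Intel's measurement


def ratio(candidate: float, baseline: float) -> float:
    """Return how many times the baseline throughput the candidate achieves."""
    return candidate / baseline


amd_claim = ratio(TURIN_AMD, XEON_AMD)                # AMD's headline comparison
intel_vs_amd_xeon = ratio(XEON_IPEX_INTEL, XEON_AMD)  # Intel vs. AMD's Xeon figure
intel_vs_turin = ratio(XEON_IPEX_INTEL, TURIN_AMD)    # Intel vs. AMD's Turin figure

print(f"Turin vs. Xeon (AMD's numbers): {amd_claim:.2f}x")          # 5.37x
print(f"IPEX Xeon vs. AMD's Xeon run:   {intel_vs_amd_xeon:.2f}x")  # 5.49x
print(f"IPEX Xeon vs. AMD's Turin run:  {intel_vs_turin:.2f}x")     # 1.02x
```

AMD’s “up to 5.4x” rounds from about 5.37x, while Intel’s optimized result works out to roughly 5.5 times the Xeon figure AMD published and about 2% above AMD’s Turin number. The ratios alone say nothing about whether the two test setups were otherwise comparable.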

Intel also claimed that in translation and summarization workloads, its Xeon chips delivered 1.2x and 2.3x faster performance, respectively, compared with the benchmarks AMD showcased at Computex. AMD had claimed that Turin was 2.5x and 3.9x faster than 5th-gen Xeon in these workloads.

It can be a little confusing to sift through the claims and counterclaims from the two chipmakers, but both sets of benchmarks are likely accurate, depending on how you look at them. AMD’s tests are probably valid for a naive, unoptimized configuration of Llama 2-7B, but Intel is well within its rights to point out that nobody is likely to run AI workloads on its hardware without the Intel Extension for PyTorch, which offers a significant uplift in real-world performance.