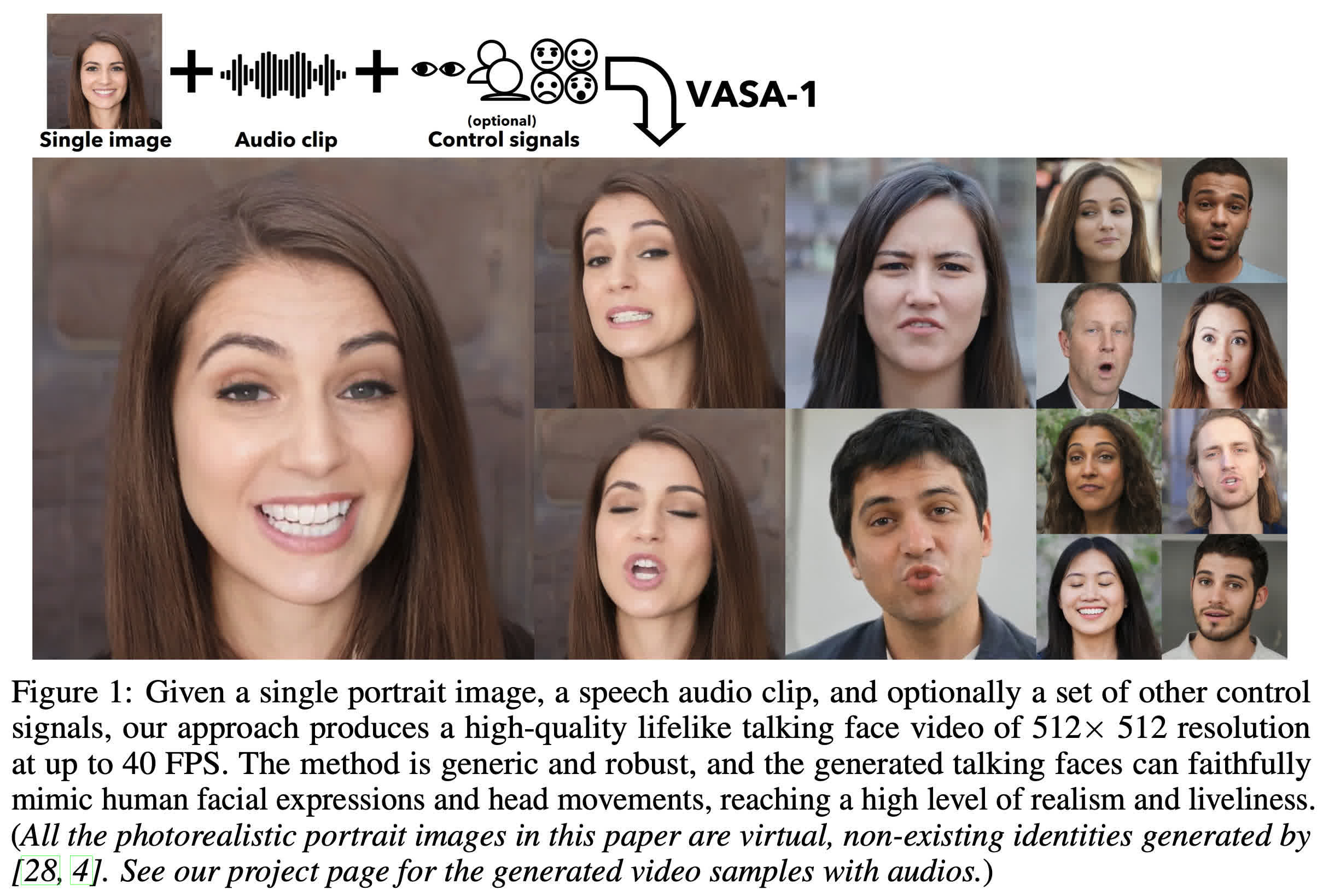

Through the looking glass: Microsoft Research Asia has released a white paper on a generative AI application it is developing. The system is called VASA-1, and it can create very realistic videos from just a single image of a face and a vocal soundtrack. Even more impressive is that the software can generate the video and swap faces in real time.

The Visual Affective Skills Animator, or VASA, is a machine-learning framework that analyzes a facial image and then animates it to a voice, syncing the lip and mouth movements to the audio. It also simulates facial expressions, head movements, and even unseen body movements.

Like all generative AI, it isn't perfect. Machines still have trouble with fine details like fingers or, in VASA's case, teeth. If you pay close attention to the avatar's teeth, you can see them change size and shape, which gives them an accordion-like quality. The effect is relatively subtle and seems to vary with the amount of movement in the animation.

There are also a few mannerisms that don't look quite right. They are hard to put into words; it's more that your brain registers something slightly off about the speaker. However, this is only noticeable under close examination. To casual observers, the faces can pass as recordings of real people speaking.

The faces used in the researchers' demos are also AI-generated, using StyleGAN2 or DALL-E 3. However, the system works with any image, real or generated. It can even animate painted or drawn faces. The Mona Lisa singing along to Anne Hathaway's performance of "Paparazzi" on Conan O'Brien is hilarious.

Joking aside, there are legitimate concerns that bad actors could use the tech to spread propaganda or to scam people by impersonating their family members. Because many social media users post pictures of relatives on their accounts, it would be easy for someone to scrape an image and mimic that family member. They could even combine it with voice-cloning tech to make the impersonation more convincing.

Microsoft's research team acknowledges the potential for abuse but offers no adequate answer for combating it beyond careful video analysis. It points to the artifacts mentioned above while ignoring that its own ongoing research and continued improvements to the system will likely make those artifacts harder to spot. The team's only tangible effort to prevent abuse is not releasing the system publicly.

"We have no plans to release an online demo, API, product, additional implementation details, or any related offerings until we are certain that the technology will be used responsibly and in accordance with proper regulations," the researchers said.

The technology does have some intriguing and legitimate practical applications, though. One would be to use VASA to create realistic video avatars that render locally in real time, eliminating the need for a bandwidth-consuming video feed. Apple is already doing something similar with the Spatial Personas available on the Vision Pro.

Check out the technical details in the white paper posted on the arXiv repository. There are also more demos on Microsoft's website.
