
Generative AI chatbots may be helping to transform the business landscape, but they aren’t without their problems. One of their most significant issues is that, when asked a question, they sometimes present inaccurate or plainly made-up information as if it were correct, and the results are amusing, to say the least. For instance, when someone asked ChatGPT a nonsensical question about when the Golden Gate Bridge was transported across Egypt, it confidently produced the entirely fictional date of October 2016. These errors are known as AI hallucinations, and by some estimates they occur up to 20% of the time. They happen when AI models are left with too much freedom, which leads them to provide inaccurate or contradictory information and raises ethical concerns and distrust. So, can we use generative AI to get good-quality answers? The answer is yes, and this article explores specific techniques to reduce these hallucinations. It’s all about how you ask the questions.

Avoiding vagueness and ambiguity

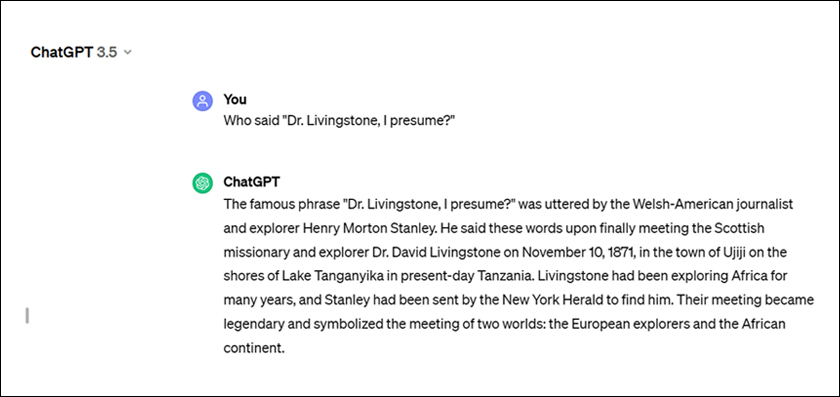

Interestingly, AI doesn’t respond well to imprecise or unclear prompts. When a prompt doesn’t provide enough detail, it gives the AI room to confabulate and fill in whatever the prompt left out. For instance, when asked who said, “Dr. Livingstone, I presume?” ChatGPT 3.5 immediately came back with this widely known answer:

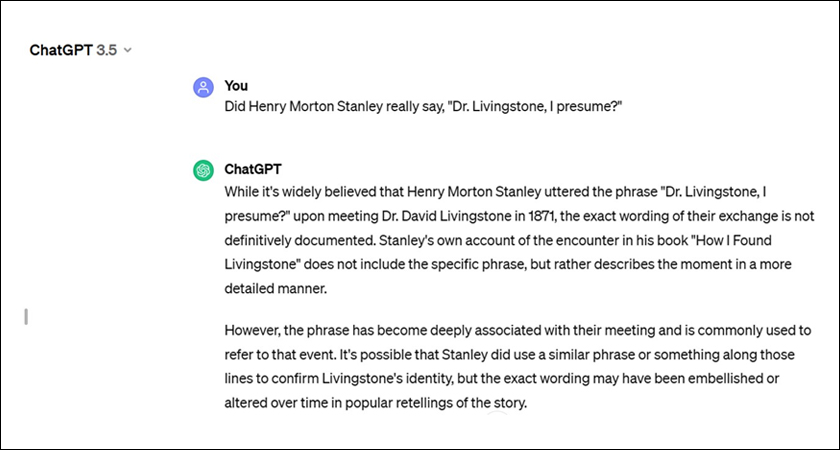

However, when we specifically asked whether Henry Morton Stanley actually said those words, this was the answer:

In this case, the ambiguity was buried in the details. The first prompt was open-ended, while the second asked for more specific, verifiable information, and so it returned an answer that differed from the first one.
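The two prompts differ mainly in how much room they leave the model. Here is a minimal Python sketch of that contrast. The helper names and the exact prompt wording are hypothetical, since the article’s actual prompts appear only in the screenshots.

```python
def open_ended_prompt(quote: str) -> str:
    # Vague: the model is free to fill any gap with whatever sounds most familiar.
    return f'Who said, "{quote}"?'


def verification_prompt(quote: str, person: str) -> str:
    # Specific: names the exact claim to check, asks for a verifiable source,
    # and gives the model an explicit way to admit uncertainty.
    return (
        f'Did {person} actually say the words "{quote}"? '
        "Answer yes, no, or uncertain, and name the source you are relying on. "
        "If you are not sure, say so instead of guessing."
    )


if __name__ == "__main__":
    quote = "Dr. Livingstone, I presume?"
    print(open_ended_prompt(quote))
    print(verification_prompt(quote, "Henry Morton Stanley"))
```

The second helper narrows the task from “recall something plausible” to “check one named claim,” which is the shift the two screenshots illustrate.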

Experimenting with Temperature

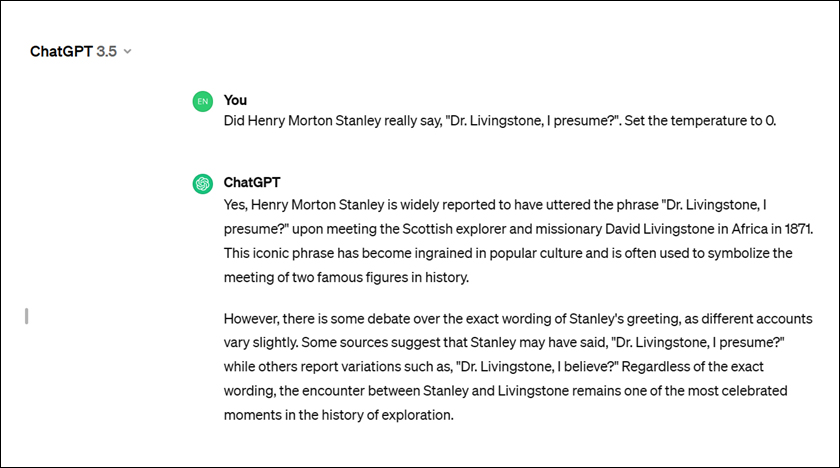

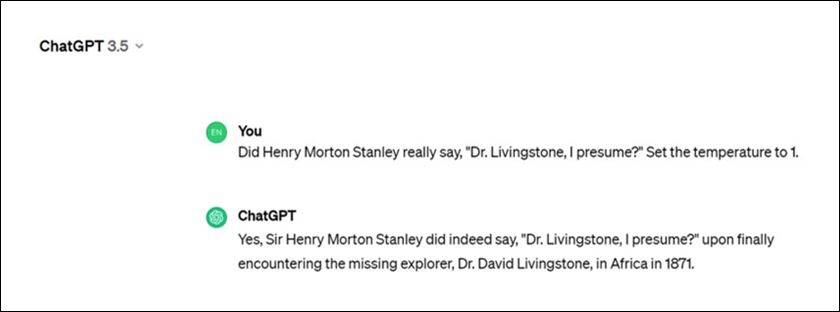

Temperature settings also play a big part in AI hallucinations. The lower a model’s temperature setting, the more conservative and predictable its answers. The higher the temperature, the more creative and random the model gets with its answers, making it more likely to “hallucinate.”
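Under the hood, temperature typically rescales the model’s raw scores (logits) before a softmax turns them into probabilities. The sketch below assumes that standard formulation and treats temperature 0 as greedy decoding. The candidate tokens and scores are made up for illustration and do not come from any real model.

```python
import math
import random


def softmax_with_temperature(logits, temperature):
    """Turn raw scores into probabilities, scaled by temperature.

    Temperature 0 is treated as greedy decoding: all probability goes to the top score.
    """
    if temperature < 0:
        raise ValueError("temperature must be non-negative")
    if temperature == 0:
        best = max(range(len(logits)), key=lambda i: logits[i])
        return [1.0 if i == best else 0.0 for i in range(len(logits))]
    scaled = [x / temperature for x in logits]
    top = max(scaled)  # subtract the max before exp() for numerical stability
    exps = [math.exp(x - top) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def sample_token(tokens, logits, temperature, rng):
    # Draw one token according to the temperature-scaled probabilities.
    probs = softmax_with_temperature(logits, temperature)
    return rng.choices(tokens, weights=probs, k=1)[0]


if __name__ == "__main__":
    tokens = ["Paris", "Lyon", "Atlantis"]  # hypothetical candidate answers
    logits = [3.0, 1.5, 0.5]                # hypothetical model scores
    for t in (0, 0.5, 1.0, 2.0):
        print(t, [round(p, 3) for p in softmax_with_temperature(logits, t)])
    rng = random.Random(0)
    print([sample_token(tokens, logits, 0, rng) for _ in range(5)])
    print([sample_token(tokens, logits, 1.0, rng) for _ in range(5)])
```

As the temperature rises, the top candidate’s share of the probability shrinks. At 0 every draw is the top-scoring token, while at higher settings less likely (and possibly wrong) candidates can start to appear.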

We saw this firsthand when we tested ChatGPT 3.5 with two different temperature settings. As the screenshots above show, changing the temperature to 1 changed the model’s answer to the same question it had earlier answered at a temperature of 0.
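When working through the API rather than the chat interface, temperature is simply a field in the request. The sketch below builds two request bodies in the shape used by OpenAI’s Chat Completions endpoint that are identical except for `temperature`. The helper name and the question are illustrative, and actually sending the requests (with an API key and an HTTP client or the official SDK) is left out.

```python
import json


def chat_request(prompt: str, temperature: float, model: str = "gpt-3.5-turbo") -> dict:
    # Body for a Chat Completions request; only `temperature` varies between runs.
    return {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
        "temperature": temperature,
    }


if __name__ == "__main__":
    question = "When was the Golden Gate Bridge transported across Egypt?"  # illustrative
    for t in (0, 1):
        # Print each body so the two runs can be compared side by side.
        print(json.dumps(chat_request(question, t), indent=2))
```

Holding everything else fixed is what makes the comparison meaningful: any difference between the two answers can then be attributed to the temperature change.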

Limiting the Potential Outcomes

Think of the difference between an open-ended essay question and a multiple-choice question. The essay gives you far more freedom, which is exactly why answers to it can drift into random or even inaccurate territory; the multiple-choice question keeps the correct answer right in front of you. It homes in on knowledge that’s already “stored” in your brain and lets you use the process of elimination to deduce the right answer.

When talking with an AI, you want to harness that “existing” knowledge in the same way.
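One way to apply the multiple-choice idea is to hand the model a fixed set of options and accept only a valid pick. This is a minimal sketch with hypothetical helper names and a made-up example question; it is not taken from the article’s screenshots.

```python
from typing import List, Optional


def multiple_choice_prompt(question: str, options: List[str]) -> str:
    # Number the options so the model picks an answer instead of inventing one.
    lines = [question, "Reply with the number of exactly one option below."]
    lines += [f"{i}. {option}" for i, option in enumerate(options, start=1)]
    lines.append("If none of the options is correct, reply 0.")
    return "\n".join(lines)


def parse_choice(reply: str, options: List[str]) -> Optional[str]:
    # Accept only a valid option number; anything else (prose, 0, out of range) is None.
    text = reply.strip().rstrip(".")
    if not text.isdecimal():
        return None
    index = int(text)
    if 1 <= index <= len(options):
        return options[index - 1]
    return None


if __name__ == "__main__":
    options = ["Venus", "Mercury", "Mars"]
    print(multiple_choice_prompt("Which planet is closest to the Sun?", options))
    print(parse_choice("2", options))                  # Mercury
    print(parse_choice("Probably Mercury.", options))  # None
```

The “reply 0” escape hatch matters: without it, a model facing options that are all wrong is pushed to pick one anyway.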

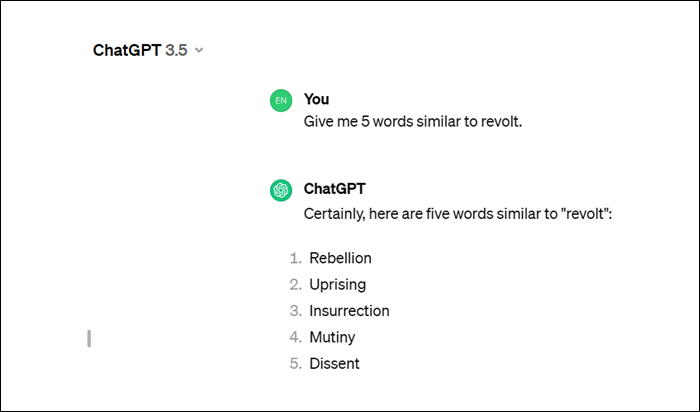

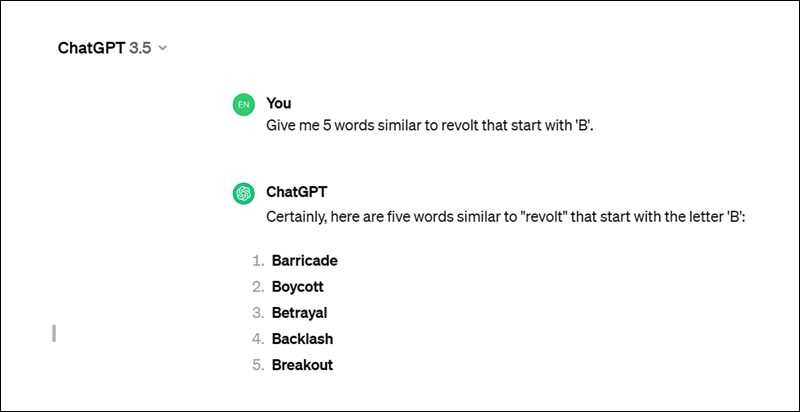

Take the open-ended prompt above, for example. We then tried to narrow the possible outcomes by specifying which words we wanted in a second prompt.

Since we wanted words similar to “revolt” that start with a specific letter, the second prompt ruled out the information we didn’t want before the model could offer it. Of course, the AI can still get sloppy with its version of events, so explicitly asking it to exclude specific results can get you closer to the truth.
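Stating constraints in the prompt narrows what the model offers, and because it can still get sloppy, it is worth checking the reply against the same constraints. A minimal sketch follows. The letter “R,” the excluded word, and the sample reply are hypothetical, since the article does not say which letter it used.

```python
from typing import List


def synonym_prompt(word: str, letter: str, exclude: List[str], count: int = 5) -> str:
    # State every constraint up front so unwanted answers are ruled out before the model replies.
    prompt = (
        f'List {count} words similar in meaning to "{word}" '
        f'that start with the letter "{letter.upper()}".'
    )
    if exclude:
        prompt += " Do not include: " + ", ".join(exclude) + "."
    return prompt


def constraint_violations(words: List[str], letter: str, exclude: List[str]) -> List[str]:
    # Return any words from the model's reply that break the constraints we set.
    banned = {w.lower() for w in exclude}
    return [
        w for w in words
        if not w.lower().startswith(letter.lower()) or w.lower() in banned
    ]


if __name__ == "__main__":
    print(synonym_prompt("revolt", "r", ["revolution"]))
    reply = ["rebellion", "riot", "uprising", "rising", "revolution"]  # hypothetical model reply
    print(constraint_violations(reply, "r", ["revolution"]))  # ['uprising', 'revolution']
```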

As someone who enjoys science fiction to a fault, I love everything from alternate realities to speculative scenarios. However, prompts that merge unrelated concepts or mix universes, timelines, or realities throw AI into a tizzy. So, if you’re trying to get clear answers from an AI, avoid prompts that combine incongruent concepts or elements that might sound plausible but aren’t. Since AI doesn’t actually know anything about our world, it will essentially try to answer in a way that fits the patterns in its training data. And if it can’t do so using actual facts, it will try anyway, resulting in hallucinations and fabrications.

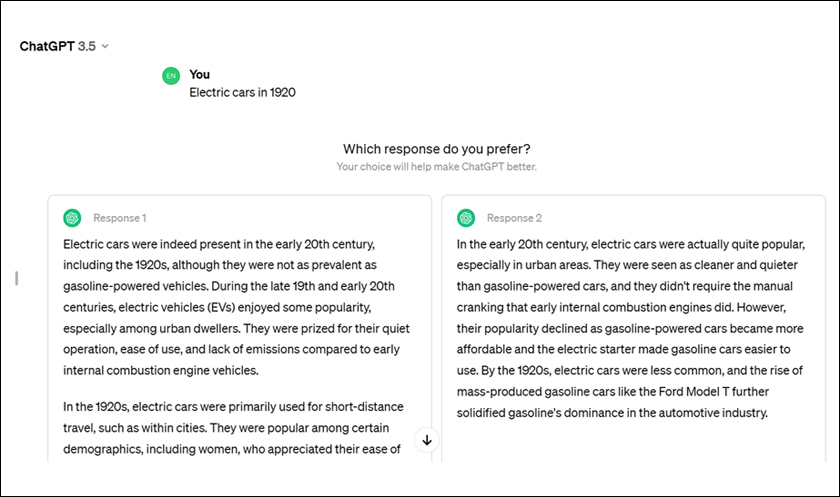

However, there’s a catch. Take a look at this prompt, where we asked about electric cars in 1920. Most people would laugh off the premise, yet the first electric vehicles were built as far back as the 1830s, even though they seem like a modern innovation. The AI also gave us two responses: the one on the left explains the concept in detail, while the one on the right, though concise, is misleading despite being factually accurate.

Verify, verify, verify

Although AI has made huge strides in the past few years, its overzealous storytelling can still turn it into a comedy of errors. AI research companies such as OpenAI are aware of the problems with hallucinations and are continually developing new models that incorporate human feedback to improve the whole process. Even so, whether you’re using AI to conduct research, solve problems, or even write code, the key is to keep refining your prompts using the techniques outlined above, which will go a long way in helping AI do its job better. And above all, always verify every output.